
Technological innovation: from PCIe 4.0 to 5.0

The progression from PCIe 4.0 to 5.0 builds on the early evolution of PCIe and on key developments in its applications. Demand for data traffic and technical capability drive each other, and the same is true of computing, networking, and storage capabilities. All of them have grown exponentially, creating a thriving ecosystem. In this article, we look ahead to the future evolution of PCIe. What we will see is not just a doubling of bandwidth, but more novel ideas and grander visions.

From PCIe 4.0 to 5.0

Between 2017 and 2019, the major change in the application space was the rise of new workloads, primarily AI/ML and cloud-based ones. These workloads shifted the focus of virtualization from one server running multiple processes to multiple connected processors handling a single, massive workload.

01 Artificial intelligence and machine learning applications

As next-generation 5G cellular networks are deployed around the world, and after several years of exploration, much of the underlying theory has been turned into practical products, making artificial intelligence ubiquitous. With advances in machine learning and deep learning, AI workloads generate, move, and process massive amounts of data in real time.

02 The continuous development of cloud computing

Enterprise workloads are migrating to the cloud. In 2017, 45% of workloads were cloud-based; by 2019, more than 60% had moved to the cloud. Over time, even more workloads will certainly become cloud-based. Data centers are ramping up hyperscale computing and networking to meet the demands of these cloud-based workloads.

03 Virtual reality/augmented reality (VR/AR) and self-driving cars are popular research fields

The I/O infrastructure requirements of these applications can be largely summed up as:

Greater I/O interconnect bandwidth to support collaboration among more accelerators and TPUs;

Faster network protocols to support economies of scale;

Faster, wider data-link channels with lower latency.

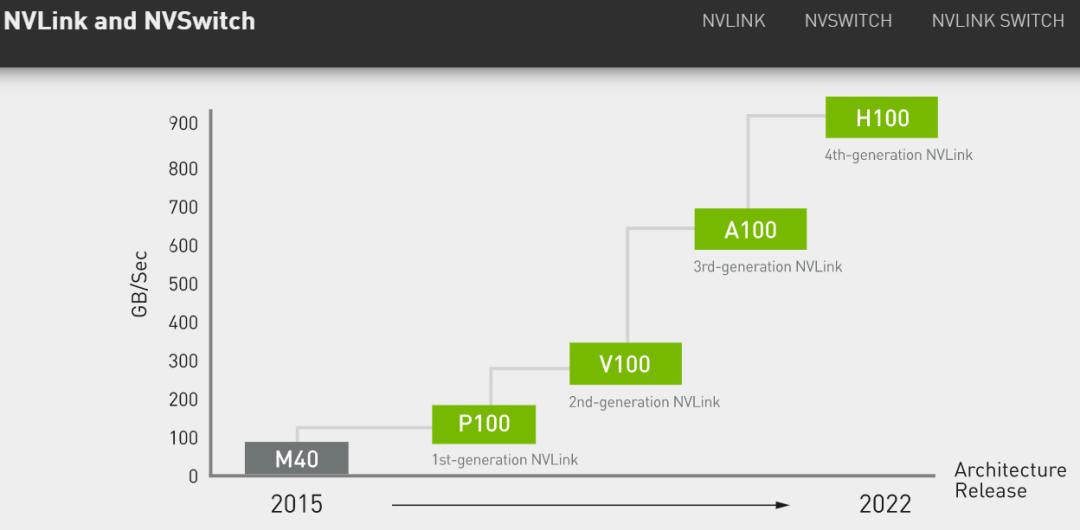

The shortcomings of PCIe 4.0 have already emerged. For example, in 2018 NVIDIA introduced NVSwitch, which uses its proprietary NVLink protocol to interconnect multiple GPUs. The first-generation NVSwitch delivers 300 GB/s, while PCIe 4.0 x16, specified in 2017, delivers 64 GB/s.

Image source: NVIDIA official website
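The 64 GB/s figure for PCIe 4.0 x16 can be reproduced from the per-lane transfer rate and the line encoding. The Python sketch below assumes the published per-lane rates (2.5, 5, 8, 16, and 32 GT/s) and encodings (8b/10b for PCIe 1.0 and 2.0, 128b/130b from 3.0 onward), and it counts encoding overhead only; packet headers and flow control, which lower real throughput further, are deliberately left out. The dictionary and function names are illustrative, not from any library.

```python
# Per-lane raw transfer rate (GT/s) and line-encoding efficiency per generation.
# Assumption: 8b/10b for 1.0/2.0, 128b/130b for 3.0 to 5.0.
PCIE_GENERATIONS = {
    "1.0": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
    "5.0": (32.0, 128 / 130),
}


def link_bandwidth_gbytes(gen: str, lanes: int, bidirectional: bool = False) -> float:
    """Approximate bandwidth in GB/s after line encoding only.

    Protocol overhead (TLP/DLLP headers, flow control) is ignored,
    so real-world throughput is lower than this estimate.
    """
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    # One transfer carries one bit per lane; divide by 8 to get bytes.
    per_direction = rate_gt_s * efficiency * lanes / 8
    return per_direction * 2 if bidirectional else per_direction


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        one_way = link_bandwidth_gbytes(gen, 16)
        total = link_bandwidth_gbytes(gen, 16, bidirectional=True)
        print(f"PCIe {gen} x16: {one_way:6.2f} GB/s per direction, {total:6.2f} GB/s total")
```

For PCIe 4.0 x16 this gives about 31.5 GB/s per direction, or about 63 GB/s counting both directions, which is the figure usually rounded to 64 GB/s. The same arithmetic gives about 126 GB/s total for PCIe 5.0 x16, still well below the 300 GB/s quoted for the first-generation NVSwitch.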

In terms of storage, NVMe continues to advance steadily. NVMe-oF has added a TCP transport binding alongside the existing RDMA binding. This new transport option significantly improves flexibility for hybrid-cloud and multi-cloud deployments.

Application requirements – PCIe 4.0 vs 5.0

Combining application requirements with the development of compute, network, and storage units, the vision behind the PCIe 5.0 specification was: “a game-changer for data center/cloud computing, and built-in support for alternative protocols in the future.” PCIe 5.0 modifies the training sequences (TS1 and TS2), adding a new field that carries an ID for future alternate protocols, and introduces enhanced precoding. This opens up the PCIe standard, allowing future protocols to reuse some of PCIe's proven firmware and software stacks and expanding the scope and capabilities of PCIe. In short, the physical layer of future PCIe can carry different protocols. PCIe 5.0 provides high-bandwidth, low-latency connectivity for next-generation applications in artificial intelligence/machine learning (AI/ML), data centers, edge computing, 5G infrastructure, and graphics.

The PCIe 6.0 specification was released in January 2022, and its vision is “heterogeneous computing applications, such as artificial intelligence, machine learning and deep learning.” Technically, PCIe 6.0 changes the encoding, the number of bits transferred per cycle, and the packet format, all of which had remained unchanged since PCIe 3.0. PCIe 6.0 is therefore a more comprehensive upgrade.
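The change in bits per cycle is easiest to see at the symbol level. In the sketch below, NRZ sends one bit per symbol on two levels, while PAM4 (the signaling PCIe 6.0 adopts) sends two bits per symbol on four levels, so the same symbol rate carries twice the data. The Gray-coded mapping, in which adjacent levels differ by one bit, is an assumption chosen because it is a common way to limit bit errors in PAM4; the levels 0 to 3 are abstract, and the sketch does not model PCIe 6.0's new packet format or error correction. All names are hypothetical.

```python
# Assumed Gray-coded PAM4 mapping: adjacent levels differ in exactly one bit.
# Levels 0..3 are abstract amplitudes, not electrical values from the spec.
GRAY_PAM4_LEVELS = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def nrz_encode(bits):
    """NRZ: one symbol per bit, two levels (0 or 1)."""
    return list(bits)


def pam4_encode(bits):
    """PAM4: two bits per symbol, mapped to one of four levels."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def data_rate_gbps(symbol_rate_gbaud, bits_per_symbol):
    """Per-lane data rate for a given symbol rate and bits carried per symbol."""
    return symbol_rate_gbaud * bits_per_symbol


if __name__ == "__main__":
    payload = [1, 0, 1, 1, 0, 0, 0, 1]
    print("NRZ symbols: ", nrz_encode(payload))   # 8 symbols
    print("PAM4 symbols:", pam4_encode(payload))  # 4 symbols: [3, 2, 0, 1]
    # Same 32 GBd symbol rate, twice the bits per symbol.
    print("NRZ :", data_rate_gbps(32, 1), "Gb/s per lane")
    print("PAM4:", data_rate_gbps(32, 2), "Gb/s per lane")
```

At the 32 GBd symbol rate PCIe 5.0 already uses, two bits per symbol yields the 64 GT/s of PCIe 6.0 without raising the signaling frequency, which is why PAM4 eases the signal-integrity pressure described later in this article.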

The more straightforward reason PCIe 5.0 followed PCIe 4.0 so quickly is that Intel has accelerated the pace of its processor development to improve its competitiveness, including breaking through bottlenecks in its manufacturing process. (Slow progress in manufacturing processes delayed the launch of new cores, which is also one reason the Intel platform was late to the PCIe 4.0 era.)

PCIe 5.0 is important for the development of PCIe

The more deep-seated reason is that PCIe 5.0 is an important watershed in the development of PCIe. It marks not only the end of one era, but also the beginning of another.

The “end” refers to the fact that each previous generation of PCIe roughly doubled the signaling rate. With PCIe 5.0, the cost of maintaining signal integrity and controlling loss has reached a peak (we will analyze this further in future articles). To keep the cost of ensuring signal quality under control, a change of approach is needed.

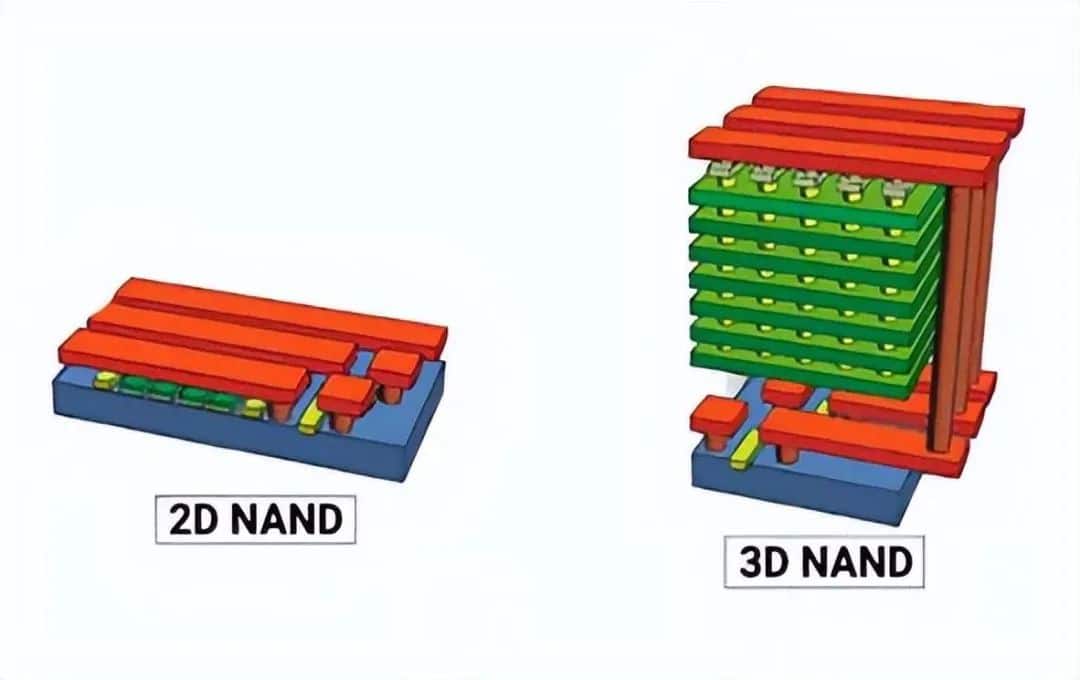

One option is to change the modulation method (for example, PCIe 6.0 moves from NRZ to PAM4, four-level pulse amplitude modulation). Another is to reduce the number of lanes each device uses. Because PCIe 5.0 x4 offers the same bandwidth as PCIe 3.0 x16, some future devices can use fewer lanes. For example, 25/40 Gb network cards can use x4 links, and graphics cards can use x8 or even x4. This helps reduce controller complexity and connector size. The x4 link will become a standard interface for many devices, including SSDs. Most devices may then be positioned on performance and cost not by link width (x4, x8, or x16) but by PCIe version.
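The lane-count argument is simple arithmetic: with 128b/130b encoding, each generation doubles per-lane bandwidth, so four PCIe 5.0 lanes carry as much as sixteen PCIe 3.0 lanes. The helper below is a hypothetical sketch that again ignores protocol overhead and treats a NIC's Ethernet line rate as the per-direction bandwidth it needs; it finds the narrowest standard link width that covers a given throughput.

```python
import math

# Per-lane transfer rates (GT/s) for the 128b/130b generations.
RATES_GT_S = {"3.0": 8.0, "4.0": 16.0, "5.0": 32.0}
STANDARD_WIDTHS = (1, 2, 4, 8, 16)


def link_gbytes(gen: str, width: int) -> float:
    """Per-direction bandwidth in GB/s after 128b/130b encoding, no protocol overhead."""
    return RATES_GT_S[gen] * (128 / 130) * width / 8


def min_link_width(gen: str, required_gbytes: float):
    """Narrowest standard width meeting the requirement, or None if x16 is not enough."""
    for width in STANDARD_WIDTHS:
        if link_gbytes(gen, width) >= required_gbytes:
            return width
    return None


if __name__ == "__main__":
    # PCIe 5.0 x4 vs PCIe 3.0 x16: both about 15.75 GB/s per direction.
    print(math.isclose(link_gbytes("5.0", 4), link_gbytes("3.0", 16)))
    for nic_gbit in (25, 40):
        need = nic_gbit / 8  # Gb/s line rate -> GB/s, ignoring Ethernet overhead
        widths = {gen: min_link_width(gen, need) for gen in RATES_GT_S}
        print(f"{nic_gbit} Gb NIC needs at least:", widths)
```

By this rough estimate, a 40 Gb NIC needs x8 on PCIe 3.0, x4 on PCIe 4.0, and only x2 on PCIe 5.0, so an x4 PCIe 5.0 link leaves ample headroom.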

A new era of CXL

Now that a new era has begun, CXL (Compute Express Link) has emerged. CXL is an open interconnect protocol that Intel launched in 2019. It aims to provide high-speed, efficient interconnection among CPUs, GPUs, FPGAs, and other accelerators to meet the needs of high-performance heterogeneous computing. The key point is that the CXL interface specification is compatible with PCIe 5.0. Therefore, investing in PCIe 5.0 as early as possible amounts to paving the road for the adoption of CXL. CXL can also change the traditional form of accelerators and memory (internal add-in cards), turning them into more maintainable modules. Sharp readers may already have guessed where this leads: front-mounted, hot-pluggable modules become feasible. Remember EDSFF, which we discussed in a previous article?

That’s right. As we explained, EDSFF is not only for SSDs, but also for memory devices, accelerator cards, and more. In terms of form factor alone, EDSFF is one of the few clean-sheet designs of recent decades in the x86 world, which otherwise places great emphasis on compatibility and legacy support. It carries no historical baggage from earlier modules (for example, U.2 inherited the form of the 2.5-inch hard disk) and is fully optimized for 1U and 2U chassis. Its deployment density and cooling efficiency far exceed those of the older 2.5-inch and 3.5-inch modules. Furthermore, the combination of PCIe 5.0/6.0, CXL, and EDSFF is enough to revolutionize rack deployment efficiency. This is the evolution from “can do it” (with enough bandwidth) to “do it well”: first meet basic needs, then optimize in a targeted way to drive further evolution.

PCIe 4.0 vs 5.0 Conclusion:

Looking back at the development of the bus, each PCIe generation's upgrade has depended on “whether there is a need.” When the upgrade cycle was long, it was because the infrastructure bottleneck facing applications at the time was not the system bus. When the cycle was short, it was because the bus could not keep up with rapidly developing applications. Demand drives technological development, and technology opens up new demand: this pattern has held in the past and will continue to hold in the future.
